Yes, there are numbers. Yes, there’s computer code. No, you don’t need to know how to update your feature weights. You’re an executive; feature weights don’t matter. Here’s what you do need to know.

What we know so far

This is the assumed background knowledge for this article. There’s a big fancy mysterious process, and then it outputs some sort of clever/useful/intelligent results. You incorporate the smart results into your product, and go on your merry way.

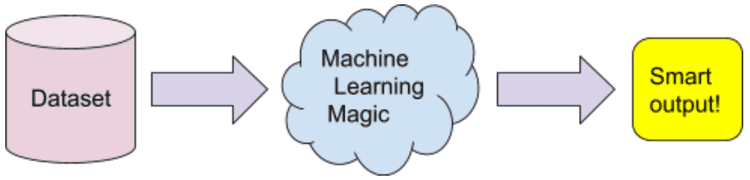

The dataset

Let’s break this down further. All machine learning needs at least one dataset. The type of smart results you get will strongly depend on this input dataset.

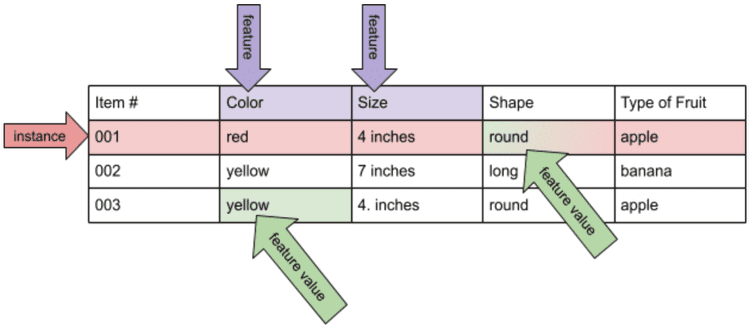

What does the dataset look like? It’s a collection of things (instances) and machine-readable descriptions (features) of those things. Here’s an example:
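As a minimal sketch, here is a tiny made-up fruit dataset in Python. Each dictionary is one instance, and each key is one feature. The feature names (`length_cm`, `width_cm`, `color`, `label`) and all values are hypothetical, chosen only for illustration.

```python
# Hypothetical toy dataset: each dict is one instance (a piece of fruit),
# each key is one feature describing it. "label" is the feature we might
# later choose to predict.
fruit_dataset = [
    {"length_cm": 8.0, "width_cm": 7.5, "color": "red", "label": "apple"},
    {"length_cm": 7.0, "width_cm": 7.0, "color": "green", "label": "apple"},
    {"length_cm": 19.0, "width_cm": 3.5, "color": "yellow", "label": "banana"},
    {"length_cm": 17.5, "width_cm": 3.0, "color": "yellow", "label": "banana"},
]

# Every key except the label is a descriptive feature.
feature_names = [name for name in fruit_dataset[0] if name != "label"]
print(len(fruit_dataset), "instances,", len(feature_names), "features:", feature_names)
```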

A machine learning dataset will typically have a minimum of several hundred instances. It might have tens of millions.

Categories of magic

Our machine learner can do two basic things with this dataset. First, it can measure similarities (and distances) between instances based on their feature values. This is called Unsupervised Learning. Second, you can select one feature as the “To Be Predicted” feature (the class, or class label), and the machine learner will figure out a reasonably good way to predict a new instance’s class label from its other feature values. This is called Supervised Learning. You may also have heard of Active Learning, Semi-supervised Learning, Anomaly Detection, Deep Learning, and Reinforcement Learning. For our purposes, these are all variations on the basic concepts of supervised and/or unsupervised learning.
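Measuring the distance between instances is the core of the unsupervised case. A minimal sketch, assuming purely numeric features and Euclidean (straight-line) distance, which is only one of many possible distance measures; the measurements are hypothetical:

```python
import math

def euclidean_distance(a, b):
    """Straight-line distance between two instances' numeric feature vectors.
    A smaller distance means the two instances are more similar."""
    if len(a) != len(b):
        raise ValueError("instances must have the same number of features")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical [length_cm, width_cm] feature vectors.
apple = [8.0, 7.5]
other_apple = [7.0, 7.0]
banana = [19.0, 3.5]

print(euclidean_distance(apple, other_apple))  # ≈ 1.12 (similar)
print(euclidean_distance(apple, banana))       # ≈ 11.70 (dissimilar)
```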

Unsupervised learning

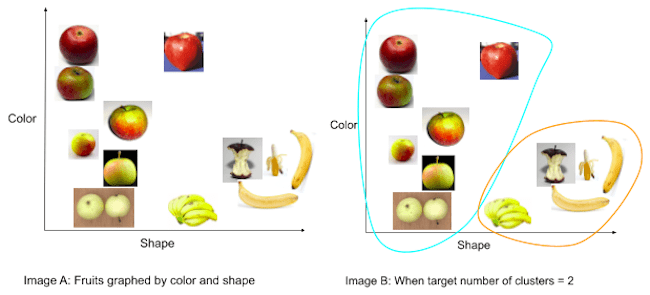

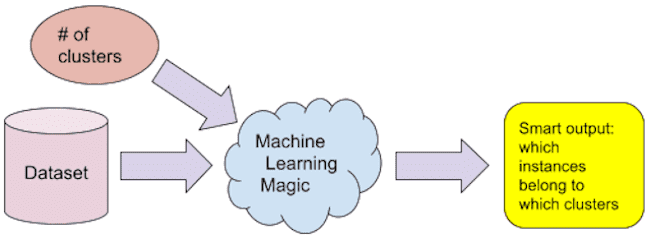

The most common form of unsupervised learning is clustering. In clustering, the user provides a dataset and either a target number of clusters or a threshold for similarity, and the machine learner figures out which instances belong to each cluster. Another common form of unsupervised learning is visualization: displaying the instances in a graph or other human-readable format so that a human can gain insights about the data.

The clustering process looks like this:
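One widely used algorithm of the “target number of clusters” kind is k-means. The sketch below is a minimal, unoptimized version assuming numeric features; `kmeans` and its parameters are hypothetical names, and real projects would normally use a library implementation instead.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Group numeric instances into k clusters. Returns (centroids, assignments)."""
    rng = random.Random(seed)
    # Start with k randomly chosen instances as the initial cluster centers.
    centroids = [list(p) for p in rng.sample(points, k)]
    assignments = None
    for _ in range(iterations):
        # Step 1: assign every instance to its nearest center.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignments == assignments:
            break  # no instance changed cluster, so we are done
        assignments = new_assignments
        # Step 2: move each center to the average of the instances assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous center
                centroids[c] = [sum(values) / len(members) for values in zip(*members)]
    return centroids, assignments

# Hypothetical [length_cm, width_cm] measurements: three apples, then three bananas.
points = [[8.0, 7.5], [7.0, 7.0], [7.5, 8.0], [19.0, 3.5], [17.5, 3.0], [18.0, 4.0]]
centroids, assignments = kmeans(points, k=2)
print(assignments)
```

The cluster numbers themselves are arbitrary. What matters is that the three apples share one number and the three bananas share the other, even though the learner was never told which fruit is which.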

Unsupervised learning is especially helpful for a human to explore a dataset and understand it better.

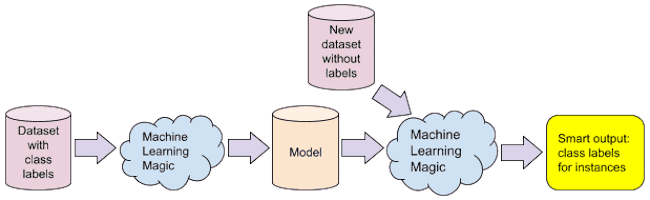

Supervised learning

Supervised learning is a two-stage process. In the first stage, the machine learner uses a labeled dataset (one where the class label is already known for every instance) to learn, or train, a model. This model is a computer-readable summary of the most important parts of the data. The model can be stored for later use, which is convenient because models can take a long time to train. In the second stage, the machine learner, now called a machine classifier, applies the model to a new dataset to make predictions about it.
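The two stages can be sketched with a deliberately simple learner. This is an illustration, not any particular library’s method: the model is just the average feature vector (centroid) of each class, stored as JSON, and new instances get the label of the nearest centroid. The function names `train` and `predict` and the data are hypothetical.

```python
import json
import math

def train(instances, labels):
    """Stage 1: learn a model. Here the 'model' is the average feature
    vector (centroid) of each class, i.e. a nearest-centroid learner."""
    sums, counts = {}, {}
    for x, y in zip(instances, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}

def predict(model, instance):
    """Stage 2: apply the model to a new, unlabeled instance."""
    return min(model, key=lambda y: math.dist(model[y], instance))

# Hypothetical training data: [length_cm, width_cm] with known labels.
X_train = [[8.0, 7.5], [7.0, 7.0], [19.0, 3.5], [17.5, 3.0]]
y_train = ["apple", "apple", "banana", "banana"]

model = train(X_train, y_train)
saved = json.dumps(model)     # the model can be stored for later use...
restored = json.loads(saved)  # ...and loaded again without retraining
print(predict(restored, [7.8, 7.2]))   # apple
print(predict(restored, [18.0, 3.2]))  # banana
```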

Common categories of supervised machine learners include many neural networks (such as CNNs and LSTMs), Support Vector Machines, Logistic Regression, Naive Bayes, and Decision Trees. One special type of supervised machine learning is sequence learning, where the data is a sequence, such as a series of daily weather readings used to predict tomorrow’s weather.
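One common way (though not the only one) to turn a sequence into ordinary supervised data is a sliding window: the previous few days become the features, and the next day becomes the class label. A minimal sketch; `make_windows` and the temperature values are hypothetical.

```python
def make_windows(sequence, window):
    """Turn one long sequence into supervised (features, label) pairs:
    the previous `window` values are the features, the next value is the label."""
    pairs = []
    for i in range(len(sequence) - window):
        features = sequence[i:i + window]
        label = sequence[i + window]
        pairs.append((features, label))
    return pairs

daily_high_c = [21, 23, 22, 25, 24, 26]  # hypothetical daily high temperatures
for features, label in make_windows(daily_high_c, window=3):
    print(features, "->", label)
# [21, 23, 22] -> 25
# [23, 22, 25] -> 24
# [22, 25, 24] -> 26
```

Each resulting pair can then be fed to any of the supervised learners listed above.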

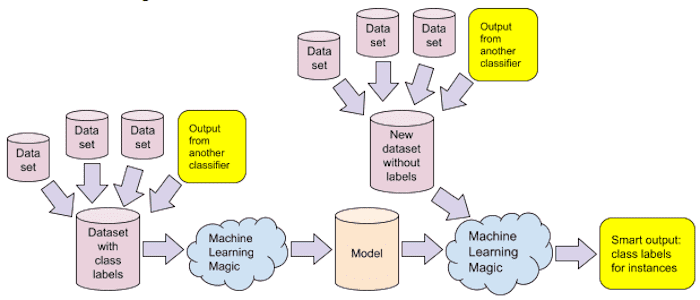

Features

Earlier, we saw that a data instance is mostly composed of a list of features. A feature can be any description of an instance. It can be the length of an object in inches or kilometers, the color of an object, the color of a pixel in an image, or whether or not the object is listed in Wikipedia’s list of pome fruits. It can even be the output class label of another machine classifier. For example, we can build one classifier to classify a picture of fruit as “round” or “long”, and then use this class label as a feature for a classifier that labels a picture of fruit as “apple” or “banana”. The “round”/“long” classifier will not be 100% accurate, but that’s OK: its output is still a useful feature for the “apple”/“banana” classifier, which may learn to work around the “round”/“long” inaccuracies. Because features come in so many types and from so many sources, one ML dataset might be a combination of several raw datasets.
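The chaining idea can be sketched in a few lines. To keep it short, a hand-written rule (a hypothetical 2:1 length-to-width threshold) stands in for a trained “round”/“long” classifier, and the second classifier simply learns the most common fruit for each shape value. All names and data are illustrative assumptions; a real second classifier would combine the shape feature with other features.

```python
from collections import Counter, defaultdict

def shape_classifier(length_cm, width_cm):
    """Stand-in for a trained "round"/"long" model (hypothetical 2:1 rule).
    Like any real classifier, it could be wrong on unusual fruit."""
    return "long" if length_cm / width_cm > 2.0 else "round"

def add_shape_feature(instance):
    """Copy an instance and add the first classifier's output as a new feature."""
    enriched = dict(instance)
    enriched["shape"] = shape_classifier(instance["length_cm"], instance["width_cm"])
    return enriched

def train_fruit_classifier(instances, labels):
    """Second classifier: learn the most common fruit label for each shape value."""
    votes = defaultdict(Counter)
    for instance, label in zip(instances, labels):
        votes[instance["shape"]][label] += 1
    return {shape: counts.most_common(1)[0][0] for shape, counts in votes.items()}

# Hypothetical raw measurements with known fruit labels.
raw = [
    {"length_cm": 8.0, "width_cm": 7.5},
    {"length_cm": 7.0, "width_cm": 7.0},
    {"length_cm": 19.0, "width_cm": 3.5},
    {"length_cm": 17.5, "width_cm": 3.0},
]
labels = ["apple", "apple", "banana", "banana"]

fruit_model = train_fruit_classifier([add_shape_feature(r) for r in raw], labels)
new_fruit = add_shape_feature({"length_cm": 18.0, "width_cm": 3.2})
print(new_fruit["shape"], fruit_model[new_fruit["shape"]])  # long banana
```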

Feature engineering

Different machine learning problems require different input features. Creating an effective set of features for a particular ML problem is called feature engineering. It’s often considered a boring task, but it’s extremely important for building an effective machine classifier. Imagine if the features for our “apple”/“banana” classifier were (i) “Is this part of a plant?”, (ii) “Is this edible?”, and (iii) “Does it have a peel?” Apples and bananas would answer “yes” to all three, so our machine learner would be unable to learn a good model, and our machine classifier would be useless! Not every feature needs to be useful, but the more useful features you have, the better, and no ML algorithm is completely immune to the effects of useless features.
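A quick sanity check makes the problem concrete: if instances with identical feature values carry different labels, no learner that sees only those features can separate them. A minimal sketch, assuming (as the example implies) that every apple and banana answers “yes” to all three questions; `conflicting_instances` and the alternative measurements are hypothetical.

```python
def conflicting_instances(instances, labels):
    """Find feature vectors that occur with more than one class label.
    Instances with identical features but different labels cannot be told
    apart by any learner that only sees those features."""
    labels_seen = {}
    for features, label in zip(instances, labels):
        labels_seen.setdefault(tuple(features), set()).add(label)
    return {f: ls for f, ls in labels_seen.items() if len(ls) > 1}

labels = ["apple", "apple", "banana", "banana"]

# Features: (part of a plant?, edible?, has a peel?). Every fruit answers yes.
weak_features = [(True, True, True)] * 4
# Hypothetical alternative features: (length_cm, width_cm).
useful_features = [(8.0, 7.5), (7.0, 7.0), (19.0, 3.5), (17.5, 3.0)]

print(conflicting_instances(weak_features, labels))    # one vector, both labels
print(conflicting_instances(useful_features, labels))  # {}
```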

So that’s machine learning. You input certain datasets in certain places, which have a certain format, and the machine learner/classifier does certain things with the data. Your output is only as good as the input data plus the feature engineering, and is specific to a particular problem. Unsupervised ML is helpful for understanding the data, and supervised ML is helpful for teaching the machine learner to repeat a certain task without human intervention. Enjoy!